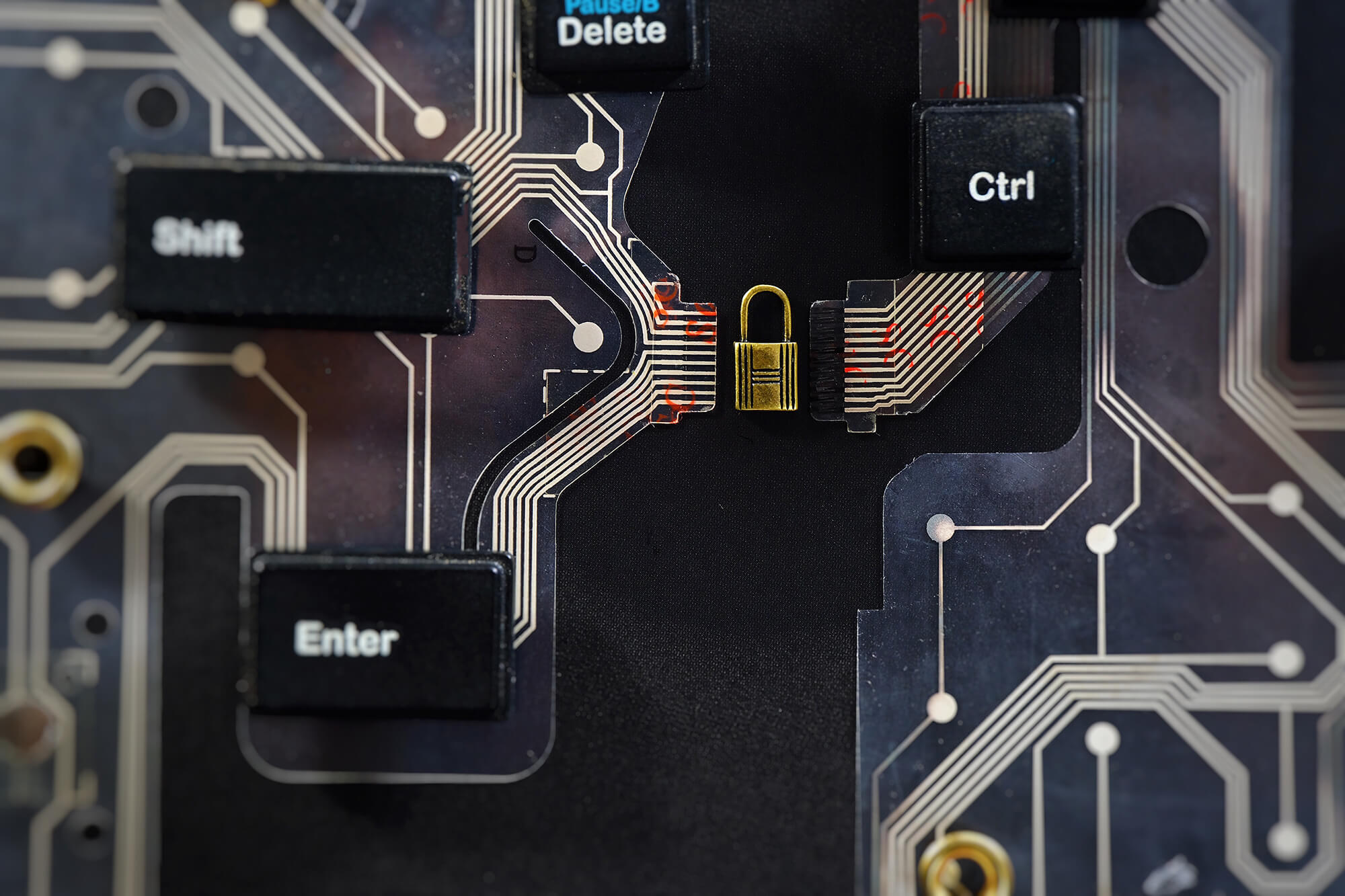

Welcome to the third part of our series on how you are giving away information for free online. All day, every day, we are interacting digitally with something, and every interaction feeds multiple algorithms that gather information about you, primarily to serve you more ads. In this article we’ll cover a couple of new ways this happens and go a bit more in depth on a couple of others.

One of those additional ways is through third-party software, particularly through Application Programming Interfaces (APIs). These are extremely handy tools that developers can use to customize various pieces of software or even build their own. In reality, though, APIs let people not so much develop their own software as stitch together existing pieces of software in different combinations. Embedded in many of these APIs is code that feeds your information back to the parent company or to other aggregators so they can better advertise to you.

Last time, we talked about how various companies are always tracking your location, but we didn’t talk much about how they do it. Everyone is familiar with how nearly every app on their phone asks to share location data. That happens by accessing your phone’s GPS data. You can, of course, deny access and even turn off your device’s GPS feature. But does that really protect you from being tracked? Well, it at least makes it harder. In truth, the more sophisticated companies can figure out your location with a high degree of accuracy by measuring how long your signal takes to travel to and from nearby cell towers. It’s simple triangulation.
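To make the idea concrete, here is a minimal Python sketch of how a position can be estimated from three towers. It assumes a flat two-dimensional map, towers at known coordinates, and perfectly measured round-trip signal times; real networks deal with noisy timing, more towers, and statistical fitting, so treat this as an illustration, not as how any particular carrier does it. Strictly speaking, working from distances like this is called trilateration, a close cousin of triangulation, which works from angles. The function names `distance_from_round_trip` and `trilaterate` are hypothetical, made up for this example.

```python
import math

# Radio signals travel at (very nearly) the speed of light, in metres per second.
SPEED_OF_LIGHT = 299_792_458.0


def distance_from_round_trip(seconds: float) -> float:
    """Turn a round-trip signal time into a one-way distance in metres (hypothetical helper)."""
    return SPEED_OF_LIGHT * seconds / 2.0


def trilaterate(towers, distances):
    """Estimate a phone's (x, y) from three tower positions and the distance to each.

    Each tower defines a circle (x - xi)^2 + (y - yi)^2 = di^2. Subtracting the
    first circle's equation from the other two cancels the squared unknowns,
    leaving two linear equations that Cramer's rule solves directly.
    Assumes a flat 2D plane and exact, noise-free distances.
    """
    (x1, y1), (x2, y2), (x3, y3) = towers
    d1, d2, d3 = distances
    # Coefficients of the two linear equations a*x + b*y = c.
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    c1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    c2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-9:
        # Three towers on one straight line cannot pin down a single point.
        raise ValueError("towers lie on one line, so the position is ambiguous")
    x = (c1 * a22 - a12 * c2) / det
    y = (a11 * c2 - c1 * a21) / det
    return x, y


if __name__ == "__main__":
    # Hypothetical towers (metres on a local grid) and a phone actually at (300, 400).
    towers = [(0.0, 0.0), (1000.0, 0.0), (0.0, 1000.0)]
    phone = (300.0, 400.0)
    distances = [math.dist(phone, t) for t in towers]
    x, y = trilaterate(towers, distances)
    print(f"estimated position: ({x:.1f}, {y:.1f})")
```

Running it recovers the phone’s position of (300.0, 400.0). The phone never reported where it was; the distances to three known points were enough, which is why switching off GPS alone doesn’t make you invisible.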

We also mentioned photos last time. How do companies use them against you? Well, if you have kids and share pictures of them online, you can bet you are going to get advertisements for toys, kids’ clothes, and family vacations. Naturally, all of that will be cross-referenced with every other bit of information you’ve shared, ensuring you’ll get ads not only for Disney World but also for the specific parks within it that are most likely to appeal to you. If there are pictures of friends on your social media, don’t be surprised if you start seeing some of the same ads they do, since those people are included in the incessant cross-referencing.

That reminds me: stop sharing so much. So many people share so many details online unnecessarily that it is possible to put together a decent biography of them just by gathering those details. You have to be very conscious of this, since even people who think they are being careful let bits and pieces drop over time.

Stop letting them collect so much data on you. Go into your settings and disable personalized advertising, turn off location sharing and app access to your contact lists, get a VPN, and stop sharing everything on social media. All you’re doing is letting these companies sell you things you probably don’t need or even want, and helping them shove you into a box. In a way, this is the most nefarious aspect of the whole thing: they make sweeping assumptions about you based on a few tidbits of information and instantly categorize you in a way that may or may not be accurate. People are more complicated than what an algorithm can capture. Someone might enjoy trap shooting and drive a Prius. Another person might enjoy reading philosophy in a coffee shop over a latte and then go home and watch John Wick.

It’s time people broke out of these boxes that mega corporations have been trying to force them into. Fortunately, TARTLE exists to help people do exactly this. With us, you can get out of the algorithms and take back control of your digital destiny.

What’s your data worth? Sign up and join the TARTLE Marketplace with this link here.

Speaker 1 (00:07):

Welcome to TARTLEcast with your hosts Alexander McCaig and Jason Rigby. Where humanity steps into the future and source data defines the path.

Alexander McCaig (00:27):

Hello, everyone. Welcome back to TARTLEcast. This is Part Three of our episode of 15 Ways to Die Online. No, it's 15 Ways You're Giving Social Media Companies Your Data.

Jason Rigby (00:37):

I want to get into this. We're on the tail end of it. User surveys steer sites to you. The other day I was on YouTube and a survey popped up all of a sudden. And it asked me, "Would you like Walmart, Costco, Target?" You don't have to ask me. Of course, I clicked Costco.

Alexander McCaig (00:54):

Yeah.

Jason Rigby (00:54):

And then it went to my video.

Alexander McCaig (00:56):

No, I know. And so what will happen with that is now they've traced the IP with your computer and you've given them a survey on your preference.

Jason Rigby (01:03):

Mm-hmm (affirmative).

Alexander McCaig (01:04):

Now that you've done that, they're going to start dishing you up ads. And, first of all, Walmart has to pay Facebook or YouTube or whoever it is to dish you up that ad. So they're like, "Well, now we know exactly who to give it to." So now it's going to be, "Let's channel Jason to this website." Or we had another user survey. If you buy a Mac and you finish the whole checkout process, then a thing from Qualtrics shows up saying ... asking you about your experience. "Frankly, I don't even give a shit. I wanted to buy the Mac and be done with you. I just spent thousands of dollars on this thing. I don't want to give you any more of my time." You know what I mean?

Jason Rigby (01:39):

Right. Yeah, exactly.

Alexander McCaig (01:40):

What's the benefit for me filling out the survey? I don't even want to go through it. Or you're on Wayfair. Okay? And say, they want to bring you to a new part of the site or issue a new coupon. Right? Or some sort of discount thing. Bring you to a new splash page. We were looking at this table we ordered for the studio, and it was totally unclear when this thing's going to get dropped off. And it has a survey over here on the right. Tell us about this experience. Right? "Lacks accuracy", all these other things. So now they know, great, we have your feedback. Now we know how to deliver you a coupon code or bring you to a new URL, whether it's through email marketing or direct marketing right here on the page.

Jason Rigby (02:14):

Yeah. That Wayfair ... You guys got to figure out your shipping. [crosstalk 00:02:18] I can almost go to the store here and grab something.

Alexander McCaig (02:21):

We could have.

Jason Rigby (02:21):

The pricing's great. It's cool to be able to order online, but-

Alexander McCaig (02:24):

I could have gone to a Target or an Ikea if there were one in Mexico-

Jason Rigby (02:28):

Yeah but this thing ... We're waiting over a month?

Alexander McCaig (02:30):

Yeah. For a table?

Jason Rigby (02:31):

For a table. Yeah. Their whole shipping system-

Alexander McCaig (02:35):

Yeah, they've got to figure that out.

Jason Rigby (02:36):

So user surveys steer sites to you. Next, is making money from third-party software. We've never talked about this before, have we, Alex?

Alexander McCaig (02:44):

No. So third-party software. A lot of them are APIs. An API is an application programming interface that people put into their programs or websites or applications. And through that, it's essentially like someone is leasing their app. Like, I'll lease you the real estate on my app to bring your API in, so that you can deliver some sort of content or whatever it might be to that individual, and they get a stream of benefits from it. So when you use someone's third-party software inside a system you're already using, you're not only giving your data to the person who created the app or the website, but you're also giving it to these other individuals that are tied in with it.

Alexander McCaig (03:24):

So your data is getting split in multiple different directions and everybody's benefiting from you. "Oh, I'm going to give you a couple shekels, you a couple, you a couple" or whatever-

Jason Rigby (03:32):

Everybody gets a new car!

Alexander McCaig (03:33):

And I look in my pocket, I got no shekels left. So you got to be cognizant of what is going on here. It's great to see all these systems put together, but when people build apps, a lot of them are just stitching together things that are already pre-built to make this [crosstalk 00:03:48] But they don't own it. So it's just dishing off into all these different areas.

Jason Rigby (03:53):

Next, is you're being trangulated.

Alexander McCaig (03:56):

Yeah, triangulated.

Jason Rigby (03:56):

Triangulated.

Alexander McCaig (03:59):

If they can't get your GPS location, because you said, "I don't want to give you my location data," here's what they do. They can look at what cell phone tower it's going through, how it's routed. If you're touching maybe two or three or five or 10 cell phone towers at once ... All you need is three points to get an area of where someone is. That's how triangulation works. Or, if we do it with multiple wifi routers ... Okay, I can see that their phone has pinged this IP address at this router, this one here, and this one here. We know where they are in a local area.

Alexander McCaig (04:30):

And your Comcast hotspots? You know the ones they put around? You're like, "Oh, I feel so good about this. I have internet walking around a major city." They're just following you everywhere you walk. And what this does is develop a heat map for Comcast. Comcast can see all the traffic. They can see everything that's going on. They know exactly how to then dish that location information that's been triangulated back to these other companies. And the social media companies? They're going to know what IP address you're doing that access from. So, regardless, they're going to know exactly where you are.

Jason Rigby (04:58):

Next, letting social networks use your photos.

Alexander McCaig (05:02):

Oh, yeah. So the second you put a photo online ... And maybe you get some sort of pop-up on Facebook that says, "Oh, your friend is on here." That's essentially an advertisement to you, or to your friend using your photograph. Or vice versa. So they're using the content that you're putting up there to then go remarket to other people that aren't connected to you. And when they do connect, now they have a connection of data points. So they can start looking at correlations and then your behaviors between one another. And then that network starts to grow. And it's very beneficial to them to do so. But they're using your content that you're putting up on their servers. If you actually owned it, you'd be the one controlling that notification going to that person, and whether or not they can see that photo in that notification. But Facebook just does it on their behalf all the time. Not to bash on them, but many other companies do the same thing.

Jason Rigby (05:51):

Next, oversharing details. Just what we just talked about.

Alexander McCaig (05:54):

Yeah. This is essentially the same thing. We overshare. Not only do you have metadata on the photos, then we talk about the photos. People explain their story on it. People fight about it. Things become political on it, whatever it might be. It's so much sharing that is happening on this, through the details and people sharing and resharing the same thing. You're just feeding them all day long. It's like, "Here's more cash. Here's more stuff on me. Here's a bigger profile on me. Keep dishing me more stuff all the time." You're doing all the legwork for them.

Jason Rigby (06:24):

This one I think is really important and you've talked about VPNs and stuff like that recently. But check your settings. We're always so quick to not even go into our settings. And we're so quick to just say, okay, privacy agreement, click, click. Okay, now I'm onto the site.

Alexander McCaig (06:38):

Yeah. Disable personalized advertisements. Okay? When you go on there, you'll see, even with Google, you have your own little serial number. And then they use that. They're like, "Oh, well, we want to deliver you the most relevant ads." No. "The more targeted the ad you can deliver me, the higher the price you can charge the company that wants that ad out there."

Jason Rigby (07:00):

Mm-hmm (affirmative).

Alexander McCaig (07:01):

"Don't act like it's for my benefit." The more rifle-focused or bullseye it is, the higher the premium. Disable that stuff in your settings. Add 2FA. See how they track you. Don't share your location; get rid of all of that stuff. Don't allow them to send you specific emails, because then they're seeing, okay, you've received the email. Oh, and you also opened it. They're tracking that too. There's all these different things you need to be like, "Okay, what is it I actually want to receive? And, what am I giving up?" And you need to be cognizant of those factors. You have to understand that everything is tracked.

Alexander McCaig (07:37):

And anything that's processed on a server is logged. Logs are the key of life, on the internet, because if you don't have it, there's no traceability. But every single thing has a log, except for some VPN providers that don't have logs, because they're all about anonymity.

Jason Rigby (07:53):

The last one. Are you ready? This one's going to get you fired up.

Alexander McCaig (07:55):

I am ready.

Jason Rigby (07:56):

This is going to get you fired up. Are you ready, Alex?

Alexander McCaig (07:58):

Yes.

Jason Rigby (07:59):

Last one. Letting yourself be grouped.

Alexander McCaig (08:03):

Oh, my gosh!

Jason Rigby (08:05):

I figured we'd end on a high note here. Here's the fireworks.

Alexander McCaig (08:07):

Here we go. So this is the most upsetting thing: we are letting companies, say, 1% of the world, define what the 99% looks like. And I've used this metaphor before. Jason, I'm not going to tell you how to dress. I'm not going to tell you how to look or feel, or what tattoos to put on your body. I'm not going to tell you how to think. Am I?

Jason Rigby (08:29):

No.

Alexander McCaig (08:29):

That's your choice. So why are you letting how you identify yourself be put into a group by a couple of people in a small office with an algorithm, to say that you belong in this box? Put me in a box. Until recently, I had the longest hair in the world. I drove a Subaru. I enjoy trapshooting, flying planes. I'm a vegan. But it's like, am I conservative, am I liberal? I still go to Cabela's. Right? But I like animal protection. What put me in that box? You want to define me for who I am? When's the last time you enjoyed someone making a blanket statement about you? Never! And the only reason they do this, and they have the ability to do this, is because you've given them the power to do so. You've relinquished all of your power over all your data. Go ahead, do it for me. And now look. Someone's saying this is who you are as a human being.

Jason Rigby (09:19):

And we talked about this off air, when we were talking about inclusion.

Alexander McCaig (09:23):

Yeah.

Jason Rigby (09:23):

And I thought it was so interesting how these algorithms, these machine learning patterns that they've developed, aren't about any of that. Their philosophy is to group, to segregate, to even go off of ... Some of these algorithms are either ... They're racist.

Alexander McCaig (09:46):

A lot of them are racist, because they take in the predefined biases that the person writing the algorithm has in place. People don't understand. The second you start to put things within a box, you begin immediate segregation. You as a data company, or whoever you might be, political research, from a hospital ... You should not define those boxes. People need to define their own boxes. We define our own limits. We know ourselves better than you know us. It's not for you to say that you know better because you've analyzed us so much. In the end, you really have no freaking clue. So the entire model, the industry needs to be inverted. And that's a function of us taking responsibility for our own data and saying, "This is who we are." Okay? Not for an algorithm to say this is how I should be defined.

Jason Rigby (10:31):

Well, it's just so aggravating too. I was talking to a friend the other day and he was raised in Oakland, in a very rough neighborhood there. And he's white, but he doesn't identify as being white. And, I know there are a lot of people out there right now, all over the world, who listen to this and feel the same way. "Don't identify me as this. I'm not that."

Alexander McCaig (10:55):

I know what I am. It's for me to tell you who I am.

Jason Rigby (10:59):

And so, whenever we're getting into ethnicity, we're getting into sexual preferences, we're getting into all of these. Now, we have an issue.

Alexander McCaig (11:07):

Yeah. And they collect all those.

Jason Rigby (11:08):

Yes.

Alexander McCaig (11:09):

And then they put you in a bucket.

Jason Rigby (11:10):

Yes.

Alexander McCaig (11:11):

And then they target you. All these major marketing guys coming out, whoever the leaders are, the forward-thinkers? They talk about inclusion. Everything you're doing is non-inclusive. When you as a marketing firm go to these other companies as a third party and tell them how to market, you're only telling them how to better put people in a bucket. What's inclusive about that? You're being inclusive, because we created more buckets? Nothing about that, of that inclusion model, gives power back to the individual. We decide to be a part of groups. We decide to join Tartle. We decide to drive our own cars. Not you telling me to get in the car and go do this. I'm making all of those choices. So don't come up with some hypocritical statement to say that, "I'm a marketing executive from a big old firm and we're talking inclusion."

Jason Rigby (11:53):

Yeah. Just because it's the key buzzword for 2021.

Alexander McCaig (11:56):

Yeah. You're not doing it. You're still putting people in funnels, boxes, predefined things, aggregating all this data and saying this is how people should be.

Jason Rigby (12:03):

Yeah. And until you can go to your clients and look at them and say, "The content that we're going to create is going to elevate humanity, and the product that you have has the ability to do that. So we're in."

Alexander McCaig (12:16):

Yeah. "Oh, by the way, we ethically sourced all the information and we didn't come up with it ourselves. We acquired it from the people that generate it."

Jason Rigby (12:22):

Yeah. But I tell people it's so funny. As long as you're in an S&P 500 ETF or whatever, investing, I guarantee you're investing ... Whether it's Philip Morris or somebody like that, I guarantee you're investing against the world being a better place. So, you're putting your money-

Alexander McCaig (12:42):

Prove it. Prove to me you're doing something for the better.

Jason Rigby (12:45):

Yes.

Alexander McCaig (12:46):

All I know is that Tesla's doing a great job. The only person who's super public and has that much clout, and that's really trying to unify and say, "I'm trying to fix some stuff," looks to be him. GE's a big firm, but does GE really have a consciousness? I don't know. They have 30 different things they own underneath their umbrella. Maybe more.

Jason Rigby (13:08):

Well, the statistics we've talked about is where ... What was it? 17, 18% of the people think that corporations are doing a bad job.

Alexander McCaig (13:18):

Yes. It's 16%. Yeah. And 65% of corporations feel that they're doing the right thing. They don't actually know they're doing the right thing. Why? Because they've never asked anybody.

Jason Rigby (13:26):

Yes.

Alexander McCaig (13:26):

I know GE builds a lot of wind turbines.

Jason Rigby (13:29):

Yes.

Alexander McCaig (13:29):

But who decided where those went? The power company, so they can get a carbon-tax credit? Or did the people say, "We want these here." Hello? You know what I mean? So the power spectrum is just totally skewed. There's no balance to it. And we're not trying to fight the other side, we're just trying to build a bridge, because the bridge has to balance on both sides so we can both traverse it properly.

Jason Rigby (13:52):

Yeah. And this is a question I think we've all looked at and something that we always look at. Would you put humanity first, above profit?

Alexander McCaig (14:02):

That's probably a hard question for them, especially when their founding documents don't even say that they put humanity first. We're here for the shareholders, not the stakeholders. We're here for shareholders. We're here to deliver shareholder value. We're here to decrease that liability to those people that are funding into this. And it's strictly an economic cycle.

Jason Rigby (14:25):

When you're using the word "inclusion", and then you're pulling lithium out of Africa. You know what I mean? We could go on and on with this. We have the Big Seven at Tartle. You're willing to destabilize the climate.

Alexander McCaig (14:43):

I'm going to call it a hypocrite when I see a hypocrite. Right?

Jason Rigby (14:46):

Oh, if these people [inaudible 00:14:48] educational access ... What's that?

Alexander McCaig (14:49):

Yeah.

Jason Rigby (14:53):

Public health?

Alexander McCaig (14:54):

There's profit to be made for some people by keeping people segregated. There's profit to be made in certain types of instability. There's profit to be made by keeping people uneducated. That's the goal for some people, even some businesses you've never even heard of. [crosstalk 00:15:12].

Jason Rigby (15:12):

... a lot of those. Yeah-

Alexander McCaig (15:12):

They're huge. You wouldn't believe the amount of people that support industry, and you'd never even know their name.

Jason Rigby (15:18):

I mentioned the S&P 500. Just go on there and look at the 500 companies [crosstalk 00:15:21] ... go through the names and see how many you know.

Alexander McCaig (15:22):

Yeah. Go look through those and then look at the ones you don't know. And then look at the private list of registered companies. I don't know. People are not very clear about what they're incentivized by. I can read documents and I can say yeah, you're a public company, but what is it that you are really trying to do? Be straightforward about it. We're being straightforward. It's not that hard to be honest.

Jason Rigby (15:49):

Are you executing for humanity? Are the systems in place in your business built so that the ultimate outcome is to leave this world a better place when this company is done? Or is it just to generate profit forever?

Alexander McCaig (16:04):

Then people will say, "Well, we created these ball bearings so people could drive to work. We did the right thing." Or, "We created a lot of money, generated a large profit-

Jason Rigby (16:13):

For jobs.

Alexander McCaig (16:14):

... and that got back to jobs. Right. Just because there were jobs doesn't mean you solved an issue that's really facing us. The job doesn't heal issues with identity.

Jason Rigby (16:27):

Yeah [crosstalk 00:16:28].

Alexander McCaig (16:27):

With segregation. It doesn't lead to an egalitarian view of things.

Jason Rigby (16:33):

Yeah. If you have a 50-member board and 48 of them are white, older males.

Alexander McCaig (16:39):

Yeah. I'm glad Lockheed Martin is making all these jets and they have 50,000 people underneath them working on this. But what are you doing in the sense of unifying? You guys create, no offense, weapons. What you do is a function of economics and defense in wartime. And I know you've made other technologies, but what is it about your primary focus to say, let's look at climate stability. Let's look at global peace, public health, economic access, educational access. Educational things regarding ... I don't know, government transparency. You have all this high technology. Why is it not being used to unify people? You literally create something that separates. It's like, "We have the highest technology. We're better than you." Just in what you're doing is already ... It's not-

Jason Rigby (17:37):

Yeah. And philosophically, we can look at Lockheed Martin, Boeing, any of those big ... Raytheon, those big defense contractors. But philosophically, they can take the view of a samurai. [crosstalk 00:17:51] A samurai is always seeking peace, but I have the tools and the ability to be able to-

Alexander McCaig (17:57):

Cut down-

Jason Rigby (17:58):

F you up really quick. Yeah-

Alexander McCaig (18:01):

And so, that mindset is already contradictory. It's like, "I want to create peace. Peace happens through unification, not by saying, 'I have the biggest stick.'" That is an archaic concept that chimpanzees use as they get into a primitive battle of dragging the stick through to say, "I'm the biggest, most macho man so everyone becomes scared. And I don't want to do anything." That doesn't unify. Fear doesn't unify. Just because you have all these great tools and you're saying, "Oh, we're defending the planet we view with our satellites." All of these things do not bring us together and help solve these primary things that are happening over here.

Jason Rigby (18:32):

Yeah. What if Lockheed Martin turned around and said, "We're going to become the world leader for peace."

Alexander McCaig (18:38):

Yeah. Why is it that-

Jason Rigby (18:39):

"But we make things that if something goes down, we're going to make sure that everyone's safe."

Alexander McCaig (18:45):

Can I ask you a question here? Why is it that Raytheon can put a missile-tracking system in a jet ... A jet flying in one direction at 600 miles an hour. I got a missile going 1,200 miles an hour in the opposite direction. And I can have this thing track this? Literally, in the nineties, I was just like, "Bop! Oh, look at that. I'm tracking this object moving." We can do all that, that sort of high technology. Taking analytics from it and movement in flight, but I can't clean up trash out of a river? This is a joke. You're worried about tracking missiles? Why don't you track trash? It's moving at the speed of sloth, not the speed of sound. You see what I'm saying? What is the real focus here? And then you look at it. It's like, we just make a lot of money making weapons. And I'm only using them as an example. We can do it for a lot of things. Putting people into buckets is its own little weapon.

Jason Rigby (19:38):

Right.

Alexander McCaig (19:42):

Segregating people is a weaponization of society.

Jason Rigby (19:46):

Yeah. But I liked the ending in this article, The 15 Ways to Give Social Media Companies Personal Data, when it said, "letting yourself be grouped."

Alexander McCaig (19:53):

Yeah.

Jason Rigby (19:54):

Notice where it put the responsibility?

Alexander McCaig (19:55):

Letting yourself. You and I always hammer the point of self-responsibility. It's not for us to change these companies. They need to change themselves. And it would be helpful if they listened to us, but you need to take a stance as the person generating that information, using these systems. You need to say, "I want control over my data. I want to receive economic gain from it. I want to share it towards causes I care about, because I want to change these things." It has to be important to you. You have to take responsibility in it. And if you can educate yourself on the matter of how you're getting used, hosed, raked through the coals, whatever you want to call it, then you're going to be like, "Wow! I'm shook. I need to do something about this. And I have all the power to do so. And now I know that there's a tool available to do it. And it's only going to take me 30 seconds to get started on it."

Jason Rigby (20:48):

And the way to get started?

Alexander McCaig (20:50):

Is you go to T-A-R-T-L-E.C-O. That is tartle.co.

Jason Rigby (20:55):

Tartle.co. Sign up. Help change the world. Look at the Big Seven. I'm going to repeat them real quick. Climate stability, educational access, human rights, global peace, public health, government and corporate transparency, and economic equalization.

Alexander McCaig (21:06):

Yes, and shout out to Lockheed Martin, I really do enjoy satellites.

Jason Rigby (21:09):

Hey, look what I got on my bookshelves all around.

Alexander McCaig (21:13):

Listen, all this stuff's cool.

Jason Rigby (21:14):

Fighter jets and stuff. I just hope they don't get used. I love the technology. Like I said, like a samurai. Who wrote The Art of War? I forgot his name. [crosstalk 00:21:27]. Yeah, Sun Tzu, yeah. He was that way too. He said the greatest warrior is one that doesn't fight.

Alexander McCaig (21:31):

And, no joke. How many wartime technologies get transitioned over to something that does an MRI scan or whatever the hell it might be?

Jason Rigby (21:39):

Yeah, right. Yeah.

Alexander McCaig (21:39):

I get it.

Jason Rigby (21:41):

But your view can't be, we make weapons and make a lot of money.

Alexander McCaig (21:45):

Yeah.

Jason Rigby (21:46):

That's the wrong-

Alexander McCaig (21:47):

Time for that part of society to phase out by-

Jason Rigby (21:51):

In the process of making weapons, we've helped the world because our focus is peace. Have this advancement, this advancement, through that. Yeah. We're using this ability to have defense, because, unfortunately, there are bad people in the world and we have to make sure that we take care of that swiftly and with justice to protect the rest of humanity. But, in the meantime, our focus is not to use these. But as we're studying and learning and doing science, all of our scientists know that their ability to be able to ... As we create this missile and we create these new technologies, we're going to take each and every part of that and focus it through a lens of, what world problem can we solve?

Alexander McCaig (22:36):

What can we solve with it?

Jason Rigby (22:37):

Now you have a different philosophy.

Alexander McCaig (22:39):

Why can't they launch a missile that just fertilizes huge amounts of land?

Jason Rigby (22:43):

Plants trees.

Alexander McCaig (22:45):

Like [inaudible 00:22:46] blows up and seeds fly everywhere.

Jason Rigby (22:47):

That'd be really cool. Oh, no! Here comes another seed bomb! Wow!

Alexander McCaig (22:54):

Look at the Titan missile! We're going to have a couple hundred thousand trees by next year. Do you know what I mean?

Jason Rigby (22:58):

Yeah. It's so awesome. We better be done.

Alexander McCaig (23:01):

See ya.

Speaker 1 (23:01):

Thank you for listening to TARTLEcast with your hosts Alexander McCaig and Jason Rigby. Where humanity steps into the future and source data defines the path. What's your data worth?